Everything you need to know about evaluating training effectiveness. Evaluation means the assessment of value or worth. Evaluation of training is the act of judging whether or not it is worthwhile in terms of set criteria.

Evaluation of training and development programmes provides assessment of various methods and techniques, sells training to management, identifies the weaknesses of training programmes and helps to accomplish the closest possible correlation between the training and the job.

Hamblin (1970) defined evaluation of training as – “Any attempt to obtain information (feedback) on the effects of training programme and to assess the value of training in the light of that information for improving further training”.

Some of the approaches and models for evaluating training effectiveness are:


1. Hamblin’s Level of Evaluation 2. Warr’s Framework of Evaluation 3. Kirkpatrick’s Model 4. Virmani and Premila’s Model 5. Bell System Approach 6. The Parker Approach 7. Jack Phillips’ ROI Method 8. Stufflebeam’s CIPP Model 9. Warr, et al.’s CIRO Model.

How to Evaluate Training Effectiveness?

Evaluating Training Effectiveness – Kirkpatrick’s Model (With the Criteria, Methods of Data Collection for Evaluation and Reasons for Training Failure)

It is necessary to evaluate existing training facilities and to measure their effectiveness. The purpose of training evaluation is to determine the ability of training programme participants to perform the jobs for which they were trained.

Specific deficiencies in on-the-job training need to be removed safely and effectively. The concept of evaluation is most commonly interpreted as determining the effectiveness of the programme in relation to its objectives. The evaluator should be clear about why he or she has been asked to evaluate the training.

Evaluation of a training programme may be carried out:


a) To increase the effectiveness of the training programme while it is in progress.

b) To implement measures that increase the effectiveness of the programme the next time it is run.

c) To obtain feedback for improving the efficiency of participants.

d) To know to what extent the training objectives have been achieved.


There are mainly four aspects of the participants’ response to training that can be properly evaluated:

i) Reaction – How did the trainee respond to the training imparted to him? For example, did he like the programme, and did he find anything in it substantially useful for doing his job?

ii) Learning – Did the trainee take an interest in learning the principles, skills and techniques taught?

iii) Behaviour change – Did the training have an impact on trainees that produced a change in their behaviour?


iv) Results – What final results were achieved? Did the training help trainees enrich their work experience? Did trainees learn how to operate machines? Has wastage of materials in the production process been reduced after training compared with before?

When evaluating the effectiveness of any training programme, irrespective of the method adopted, the following points should always be kept in mind:

i. Objective

ii. Cost benefit analysis


iii. Flexibility

iv. Results obtained

v. Staff required

vi. Whether improvement is possible.

Criteria of Evaluation:


Evaluation of training effectiveness is the process of obtaining information on the effects of a training program and assessing the value of training in the light of that information. Evaluation involves controlling and correcting the training program. The basis and mode of evaluation are determined when the training program is designed.

According to Hamblin, training effectiveness can be measured in terms of the following criteria:

(i) Reactions – A training program can be evaluated in terms of the trainees’ reactions to the objectives, contents and methods of training. If the trainees consider the program worthwhile and like it, the training can be considered effective.

(ii) Learning – The extent to which the trainees have learnt the desired knowledge and skills during the training period is a useful basis of evaluating training effectiveness.


(iii) Behaviour – Improvement in the job behaviour of the trainees reflects the manner and extent to which the learning has been applied to the job.

Methods of Data Collection for Evaluation:

Several methods can be employed to collect data on the outcomes of training.

Some of these are:

(i) Opinions and judgements of trainers, superiors and peers,

(ii) Asking the trainees to fill up evaluation forms,

(iii) Using a questionnaire to know the reactions of trainees,


(iv) Giving oral and written tests to trainees to ascertain how far they have learnt,

(v) Arranging structured interviews with the trainees,

(vi) Comparing trainees’ performance on the job before and after training,

(vii) Studying profiles and career development charts of trainees,

(viii) Measuring levels of productivity, wastage, costs, absenteeism and employee turnover after training, and

(ix) Trainees’ comments and reactions during the training period.


After the evaluation, the situation should be analysed to identify the possible causes of any difference between the expected and the actual outcomes. Necessary precautions should be taken in designing and implementing the program so that it justifies the time, money and effort invested by the organisation in training.

Information collected during evaluation should be provided to the trainees and the trainers, as well as to others concerned with designing and implementing training programs. Follow-up action is required to ensure that the evaluation report is implemented at every stage.

Kirkpatrick’s Model of Evaluating Training Effectiveness:

The American Society for Training and Development (ASTD) published Kirkpatrick’s articles on evaluating the effectiveness of training programs. His four-level evaluation model is widely accepted in industry.

The four levels of Kirkpatrick’s evaluation model measure:

1. Reaction of participants (immediately after completion of the training program).


2. Learning of participants (the resulting increase in applied knowledge).

3. Behaviour of participants (improvement in working style).

4. Results (the effect of training on business).

To evaluate the effectiveness of training, the managers concerned should begin by measuring the reactions of the trainees. If the trainer can prove the effectiveness of training in terms of learning as well as reactions, he has objective data in hand for selling future programs and for increasing his status and position in the company.

Evaluating job behaviour is more difficult than evaluating the first two levels and needs a more scientific approach. The number of variables operating at the results level makes evaluation still more complicated; it sometimes requires separating out factors other than training that influence the results.

1. Reaction of Participants:


At the first level, the aim is simply to assess participants’ reactions to the training and development program. For this purpose, participants are asked to evaluate the training after completing the program. This type of evaluation can reveal valuable data if the questions asked go beyond how well the participants liked the training.

The questions should relate to:

(i) The relevance of the training objectives.

(ii) The ability of the trainers to maintain interest.

(iii) The amount and appropriateness of interactive exercises.

(iv) The perceived value and transferability of knowledge and skills to the workplace.


Such evaluation should be conducted through an attitude survey and should not generally be administered by the evaluators directly, because participants are unlikely to provide candid answers at this point, even if the questionnaires are anonymous. As an aid to this type of evaluation, participants can be allowed to test themselves on the training content.

The advantages of such evaluation are as follows:

(i) It is easy to conduct evaluation of reactions.

(ii) It is less time-consuming and more economical.

(iii) Trainers may come to know about the feelings of participants easily.
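Questions (i) to (iv) above lend themselves to a rating-scale questionnaire. The Python sketch below shows one way to summarise anonymous responses per question. It assumes a hypothetical 1-to-5 scale on which 4 or 5 counts as favourable; the question keys and sample ratings are illustrative only and are not part of the Kirkpatrick model itself.

```python
from statistics import mean

# Hypothetical keys for questions (i) to (iv): relevance of objectives, trainer's
# ability to maintain interest, interactive exercises, transferability to the job.
QUESTIONS = ["relevance", "trainer_interest", "exercises", "transferability"]

def summarise_reactions(responses, favourable_min=4):
    """Return {question: (mean rating, share of ratings >= favourable_min)}."""
    summary = {}
    for q in QUESTIONS:
        ratings = [r[q] for r in responses if q in r]  # skip unanswered questions
        if not ratings:
            continue
        favourable = sum(1 for x in ratings if x >= favourable_min) / len(ratings)
        summary[q] = (round(mean(ratings), 2), round(favourable, 2))
    return summary

# Hypothetical anonymous questionnaires, one dict per participant (1-5 scale).
responses = [
    {"relevance": 5, "trainer_interest": 4, "exercises": 3, "transferability": 4},
    {"relevance": 4, "trainer_interest": 2, "exercises": 4, "transferability": 5},
    {"relevance": 3, "trainer_interest": 4, "exercises": 2, "transferability": 3},
]

for question, (avg, fav) in summarise_reactions(responses).items():
    print(f"{question}: mean {avg}, favourable {fav:.0%}")
```

A low score on a single question (in this sample, the interactive exercises) points to a specific part of the programme to revisit, which tells the trainer more than an overall "did you like it?" rating.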

2. Learning of Participants:

Learning objectives are related to the contents of the training and they define what the participants should be able to do or know when training is completed. Learning objectives are usually developed for each part of the training content and are shared with the participants so that they know what they should expect to learn. Evaluation at this level is designed to assess whether or not the participants have learnt what is desirable.

This can be done by testing participants at the end of the course. Knowledge, concepts and abstract skills can be assessed through written tests, whereas practical skills can be assessed by directly observing participants demonstrating the skill. Where this level of evaluation is used, it is essential to establish the participants’ baseline knowledge and skills before training begins.
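Because learning is judged against the baseline, the basic calculation is a paired comparison of each participant's pre-training and post-training test scores. A minimal Python sketch, assuming hypothetical participant IDs and scores out of 100:

```python
from statistics import mean

def learning_gains(pre_scores, post_scores):
    """Per-participant gain: post-training test score minus baseline (pre-training) score."""
    unmatched = pre_scores.keys() ^ post_scores.keys()  # participants missing one of the tests
    if unmatched:
        raise ValueError(f"both tests are needed for: {sorted(unmatched)}")
    return {p: post_scores[p] - pre_scores[p] for p in pre_scores}

pre = {"A": 55, "B": 70, "C": 62}   # hypothetical baseline scores (out of 100)
post = {"A": 78, "B": 72, "C": 80}  # hypothetical end-of-course scores

gains = learning_gains(pre, post)
print(gains)                           # per-participant gains
print(round(mean(gains.values()), 1))  # average gain for the group
```

Reporting each participant's gain, rather than only the end-of-course average, shows who barely improved (participant B here). The check for unmatched participants reflects the point above: without a baseline, a post-test score alone cannot show how much was learnt.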

3. Behaviour of Participants:

The third level of evaluation is the assessment of whether or not the knowledge and skills learned in the training and development program are actually being applied on the job. Such assessment can be made through direct observation at specified intervals of time following training.

An evaluation of application on the day after training may be quite different from one made some time later, say, three months later. It is important to note, however, that if the evaluation shows a lack of application after the specified period, it may not be the training itself that is defective; the cause may be a lack of reinforcement at the workplace.

Ideally, measurement is conducted three to six months after the training and development program. Allowing this time to pass gives participants the opportunity to implement new skills. Observation surveys, sometimes called behavioural scorecards, are used to collect data; both the participants and their supervisors can complete them.

4. Results Achieved:

Top management is always interested in the return on investment (i.e., the financial gain relative to the money invested in training). So the HR department tries to quantify the “effects” on business of the increased level of applied knowledge and behaviour. This is the fourth level of the Kirkpatrick model, which is intended to evaluate the impact of the training and development program.

The only scientific way to isolate training as a variable would be to set aside a representative control group within the larger participant population, roll out the training and development program to the rest, complete the evaluation, and compare the results against an evaluation of the untrained group.

Some examples of assessing results of training and development programs are as under:

i. For technical training, measure the reduction in calls to the help desk; reduced time to complete reports, forms, or tasks; or improved use of software or systems.

ii. For quality training, measure the reduction in number of defects.

iii. For safety training, measure the reduction in number or severity of accidents.

iv. For sales training, measure the change in sales volume, customer retention, length of sales cycle, and profitability on each sale after the training program has been implemented.
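Each of these examples compares an indicator before and after training. A small Python sketch of that comparison with hypothetical figures; note that for help-desk calls, defects or accidents a negative change is the improvement:

```python
def percent_change(before, after):
    """Percentage change from the pre-training value to the post-training value."""
    if before == 0:
        raise ValueError("the pre-training value must be non-zero")
    return (after - before) / before * 100

# Hypothetical monthly figures: (before training, after training).
indicators = {
    "help-desk calls (technical training)": (120, 90),
    "defects per 1,000 units (quality training)": (8.0, 6.0),
    "sales volume in units (sales training)": (200, 230),
}

for name, (before, after) in indicators.items():
    print(f"{name}: {percent_change(before, after):+.1f}%")
```

On its own, a before/after change does not separate the effect of training from other influences; that still requires the control-group comparison described above.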

Ideally, all four levels of training evaluation should be built into the training design and implementation. However, if only one level is used, its limitations should be clearly understood by all concerned. Where training is designed and provided by an external agency, the organisation can and should still evaluate its potential usefulness by applying predetermined criteria.

Reasons for Training Failure:

Many factors can contribute to the failure of a training programme.

According to Burack and Smith, the following factors contribute to failure of a training programme:

i) Benefits of training are not clear to top management.

ii) Top management seldom rewards superiors for carrying out effective training programme.

iii) Top management seldom plans and provides systematically for the essentials of successful training.

iv) Without proper incentives, middle management does not include training in the production schedule.

v) Without proper scheduling from superiors, first-line supervisors have different production norms if employees are not attending training programmes.

vi) Behavioural objectives are often imprecise.

vii) Training external to the employing unit sometimes teaches techniques or methods contrary to the practices of the participant’s organisation.

viii) Timely information about external programmes may be difficult to obtain.

ix) Trainers provide limited counselling and consulting services to the rest of the organisation.

Training effectiveness is the degree to which trainees are able to learn and apply the knowledge and skills acquired in the training program. It depends on the attitudes, interests and values of the trainees, and on the training environment.

A training program is likely to be effective when the trainees want to learn, are involved in their jobs, and have career goals. Contents of a training program, and the ability and motivation of trainers also determine training effectiveness.

It is necessary to evaluate the extent to which training programs have achieved the aims for which they were designed. Such an evaluation would provide useful information about the effectiveness of training as well as about the design of future training programs. Evaluation enables an organisation to monitor the training program and also to update or modify its future programs of training.

The evaluation of training results or consequences also provides useful data on the basis of which relevance of training and its integration with other functions of human resources management can be judged.

Evaluating Training Effectiveness – Hamblin’s Level of Evaluation, Warr’s Framework of Evaluation, Kirkpatrick’s Model, Bell System Approach and a Few Others

To verify a programme’s success, HR managers increasingly demand that training and development activities be evaluated systematically. A lack of evaluation may be the most serious flaw in most training and development efforts. There are many reasons for this neglect. Firstly, many training directors do not have the skills to conduct rigorous evaluation research.

Secondly, some managers are just reluctant to evaluate something which they have already convinced themselves is worthwhile. Thirdly, some of the organizations are involved in training, not because it is necessary but simply because their competitors are doing it or the unions are demanding it.

Fourthly, as training itself is very expensive, the organizations do not want to spend even a penny on the evaluation. Fifthly, some of the training programmes are very difficult to evaluate because the behaviour taught is itself very complex and ambiguous.


A comprehensive and effective evaluation plan is a critical component of any successful training programme. It should be structured to generate information on the impact of training on the trainees’ reactions, on the amount of learning that has taken place, on the trainees’ behaviour, and on its contribution to the job/organization.

Evaluation is therefore a measure of how well training has met the organization’s human resource needs. By providing an index of training’s contribution to organizational success, evaluation strengthens training as a key organizational activity.


Evaluation of training can serve several purposes.

It is necessary:

i. To determine whether the developmental objectives were achieved.

ii. To determine the effectiveness of the methods of instruction.

iii. To determine whether the best and most economical training activities were conducted.

iv. To determine the suitability and feasibility of the objectives set for training.

v. To enable improvements in the assessment of training needs.

vi. To provide feedback on the performance of the trainees and training staff, the quality of training, and other facilities provided during training.

vii. To aid the learning process of the trainee by providing knowledge of results.

viii. To highlight the impact of training on the behaviour and performance of the individual.

ix. To judge the impact of training in terms of organizational benefits.

Suchman, E.A. describes evaluation as an integral part of an operating system meant to aid trainers/training managers to plan and adjust their training activities in an attempt to increase the probability of achieving the desired action or goals.

Different typologies of evaluation have been described by various authors; some differ in the actual design, while others are a mere change of terminology. A methodology appropriate for in-company training may not be relevant for external programmes. An evaluation design may be transferable from one organization or situation to another, while its results are not. It is, therefore, necessary that the evaluation design be tailor-made to suit the situation, within the broad framework of seeking to assess:

(i) What needs to be changed/modified/improved?

(ii) What procedures are most likely to bring about this change?

(iii) Is there demonstrable and concrete evidence that change has occurred?

1. Hamblin’s Level of Evaluation:

The processes which occur as a result of a successful training programme can be divided into four levels.

Evaluation can be carried out at any of these levels:

i. The Reaction Level – Trainees react to the training (form opinions and attitudes about the trainer, the method of presentation, the usefulness and interest of the subject matter, their own enjoyment and involvement, etc.)

ii. The Learning Level – Trainees learn (acquire knowledge, skills and attitudes about the subject matter of the training, which they are capable of translating into behaviour within the training situation).

iii. The Job Behaviour Level – Trainees apply this learning in the form of changed behaviour back on the job.

iv. The Functioning Level – (Efficiency and Costs) – This changed job behaviour affects the functioning of the firm (or the behaviour of individuals other than the trainees). These changes can be measured by a variety of indices, many of which can be expressed in terms of costs.

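Hamblin’s four levels form a cause-and-effect chain linking the training effects: training leads to reactions, reactions lead to learning, learning leads to changes in job behaviour, and changes in job behaviour lead to changes in the functioning of the organization.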

According to Hamblin, we can evaluate at any of these four levels, but ideally we should evaluate at every level. He also holds that training objectives should be set at each of the four levels. Evaluation data at any of these levels can be gathered during training, immediately after it or at a specified period after it, and should preferably be compared with information on the situation before training.

2. Warr’s Framework of Evaluation:

It was developed by Peter Warr, Michael Bird and Neil Rackham. CIRO stands for context, input, reaction and outcome.

i. Context Evaluation – Obtaining and using information about the current operational context, that is, about individual difficulties, organizational deficiencies, and so on. In practice, this mainly implies the assessment of training needs as a basis for decision.

ii. Input Evaluation – Obtaining and using facts and opinions about the available human and material training resources in order to choose between alternative training methods.

iii. Reaction Evaluation – Monitoring the training as it is in progress. This involves continuous examination of administrative arrangements and feedback from trainees.

iv. Outcome Evaluation – Measuring the consequences of training.

Three levels of outcome evaluation may be distinguished:

i. Immediate outcomes – Changes in trainees’ knowledge, skills and attitudes which can be identified immediately after the completion of training.

ii. Intermediate outcomes – The changes in trainees’ actual work behaviour that result from training.

iii. Long-term outcomes – The changes in the functioning of part or all of the organization which have resulted from changes in work behaviour originating in training.

3. Kirkpatrick’s Model:

In this model, Kirkpatrick developed a conceptual framework to help determine what data should be collected.

His model calls for four steps of evaluation, which answer four very important questions:

Step 1 – Reaction – How well did the trainee like the programme? Were the participants pleased with the programme?

Step 2 – Learning – What did the participants learn (principles, facts, techniques) in the programme?

Step 3 – Behaviour – Did the participants change their job-behaviour based on what was learned in the programme?

Step 4 – Results – Did the change in behaviour positively affect the organization in terms of reduced cost, improved quality etc.?

To evaluate effectively, training managers should begin by doing a good job of measuring reactions. If the trainer can prove the effectiveness of training in terms of learning as well as reactions, he has objective data in hand for selling future programmes and for increasing his status and position in the company.

Evaluation at the job-behaviour level is more difficult than at the first two levels and needs a more scientific approach. The number of variables operating at the results level makes evaluation still more complicated; it sometimes requires separating out factors other than training that influence the results.

4. Virmani and Premila’s Model:

According to them, training constitutes a three-stage system. The first stage is the period before training, during which the trainees form expectations of the course. The second is the teaching and learning stage, and the third is the period after training when, back on the job, the trainee is supposed to integrate training with his job performance.

i. Pre-Training Evaluation:

To ensure maximum impact of training, it is essential that the training objectives match the expectations of the trainees and the needs of the user system of training. After getting acquainted with the requirements of the trainees, the trainer needs to be aware of their existing level of knowledge and skills, their attitudes, their potential in the organization and the degree to which the group is receptive to accepting and imbibing training.

ii. Content and Input Evaluation:

Next, the trainer ought to have at least some information about the context in which the trainee has to work after training. Pre-training evaluation not only helps in identifying training needs but also helps trainers evaluate the inputs and their contribution to the achievement of training objectives.

iii. Post-Training Evaluation:

Levels of evaluation after training are as follows:

Reaction Evaluation:

The participants’ impressions about the course in general and about immediate specific inputs are measured during and after the course.

a. Learning – Measure the degree of learning effected through training.

b. Job Improvement Plan (JIP) – Evaluating the trainee’s improved performance immediately after training, before he has had an opportunity to put the training into practice, poses a problem for the evaluator.

c. Evaluating Transfer of Training to the Job – On-the-job evaluation helps assess the transfer of training to the job. Any changes, modifications or deletions required in the job improvement plan can be carried out through a debriefing session. This exercise helps increase receptivity to new ideas in the trainee’s immediate work environment.

d. Follow-up of evaluation – Evaluation at this stage involves monitoring and follow-up of the trainee’s performance to assess its contribution to the organization. It also helps in identifying specific areas where improvements have been effected and in evaluating outcome in cost benefit terms.

5. Bell System Approach:

A slightly different approach was developed as a result of a study at AT&T and the Bell System.

The following levels of programme results, or outcomes were presented:

i. Reaction Outcomes.

ii. Capability Outcomes – What participants are expected to know, do or produce by the end of the programme.

iii. Application Outcomes – What participants know, think, do or produce in real-world settings.

iv. Worth Outcomes – The value of training in relation to its cost.

The outcomes represent the extent to which an organization benefits from training in terms of the money, time, effort, or resources invested.
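One common way to express a worth outcome as a single number is a benefit-cost ratio (BCR). The Bell System approach as described here does not prescribe a formula, so the sketch below is an assumption: it presumes the benefits have already been converted to money, and the cost categories and amounts are hypothetical.

```python
def benefit_cost_ratio(monetary_benefits, costs):
    """Worth of training as monetary benefits per unit of total cost."""
    total_costs = sum(costs.values())
    if total_costs <= 0:
        raise ValueError("total costs must be positive")
    return monetary_benefits / total_costs

# Hypothetical cost items for one programme and its estimated monetary benefit.
costs = {"design": 20_000, "delivery": 50_000, "participants' time": 30_000}
bcr = benefit_cost_ratio(150_000, costs)
print(f"BCR = {bcr:.2f}")  # above 1.0 means benefits exceed costs
```

Listing cost items separately, including participants' time away from work, keeps the ratio from flattering the programme by counting only delivery costs.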

6. The Parker Approach:

Parker divides the information gathered in evaluation studies into four groups:

i. Job Performance

ii. Group Performance

iii. Participant Satisfaction

iv. Participant Knowledge Gained.

Experimental Designs and Analysis:

The evaluation process should use an experimental design that indicates whether changes are due to the training or to some other cause.

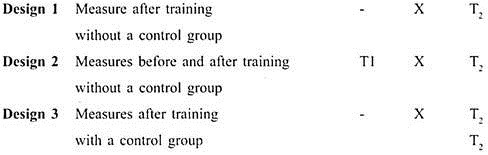

Pre-experimental designs are used quite often in organizations but are, in fact, not acceptable. In presenting the designs, X represents the exposure of a group to training, T1 the pretest, T2 the post-test, and R the random assignment of individuals to separate treatment groups.

Design 1 has no value at all. Because no pretest is given before training, changes in performance cannot be measured.

Design 2 allows us to assess changes, but without a control group one can never be sure what brought them about.

Design 3 uses a control group but is still weak.

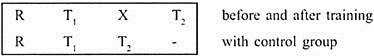

A recommended design is one that involves a training group and a control group, both of which are measured before and after training.

In this design, individuals are randomly assigned to the two conditions, thereby making the groups equivalent. This design controls for extraneous factors and can be used in most organizations.
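The logic of this design can be expressed as a calculation: the training effect is the change in the training group (T2 minus T1) minus the change in the control group over the same period, a comparison often called difference-in-differences. A minimal Python sketch with hypothetical names and scores; the function names are illustrative:

```python
import random
from statistics import mean

def randomly_assign(people, seed=None):
    """R: shuffle individuals and split them into a training group and a control group."""
    rng = random.Random(seed)
    shuffled = list(people)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def training_effect(trained_t1, trained_t2, control_t1, control_t2):
    """Change in the training group minus change in the control group (difference-in-differences)."""
    trained_change = mean(trained_t2) - mean(trained_t1)
    control_change = mean(control_t2) - mean(control_t1)
    return trained_change - control_change

training_group, control_group = randomly_assign(["P1", "P2", "P3", "P4", "P5", "P6"], seed=7)

# Hypothetical pretest (T1) and post-test (T2) scores for each group.
effect = training_effect(
    trained_t1=[60, 62, 58], trained_t2=[72, 75, 69],
    control_t1=[61, 59, 60], control_t2=[64, 62, 63],
)
print(training_group, control_group, effect)
```

The control group's change (3 points in this sample) stands for everything other than training that moved scores between T1 and T2, so subtracting it from the training group's 12-point change leaves the part attributable to training.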

Another design is the multiple time-series design. In this design, trainees are randomly assigned either to the group that receives training or to the group that does not. A series of measurements is taken before and after the time when training (or no training) occurs.

The logic of this design is that if trainees’ performance rises suddenly and remains high after training, while the control group’s performance stays at the same level, one can conclude that the training programme did bring about some improvement in the trainees’ performance.
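A rough Python sketch of this reasoning, assuming hypothetical weekly measurements and a known point at which training occurs. It checks both a level shift in the mean and whether the rise is sustained; these simple rules are an illustration only, and a formal analysis would typically fit a time-series regression instead.

```python
from statistics import mean

def level_shift(series, training_index):
    """Mean of measurements after minus mean before the point where training (or none) occurs."""
    return mean(series[training_index:]) - mean(series[:training_index])

def sustained_rise(series, training_index):
    """True if every post-training measurement exceeds every pre-training one."""
    return min(series[training_index:]) > max(series[:training_index])

trained = [61, 60, 62, 61, 70, 71, 72, 71]  # hypothetical weekly output per trainee
control = [60, 61, 60, 62, 61, 60, 62, 61]  # hypothetical weekly output, no training
k = 4  # training takes place between the 4th and 5th measurements

for name, series in (("trained", trained), ("control", control)):
    print(name, level_shift(series, k), sustained_rise(series, k))
```

Here the trained series jumps by 10 units and stays above its earlier level, while the control series barely moves, which is the pattern the design looks for.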

Many tests have been criticized as being too academic for use in business and industrial training activities. While some tests have their weaknesses, it can be said that testing works better than subjective judgement in decisions regarding the value of training.

Tests are of value to the:

i. Instructor, because they supply one of the most important sources of information as to how well the instructor (as well as the trainee) is meeting the objectives of the unit of instruction.

ii. Trainee, because they assist in diagnosing areas of difficulty, help distinguish between the relevant and the irrelevant, and can provide incentives towards greater effort.

iii. Training management, who use tests to help assess the instructional personnel, the teaching methods and materials, and whether or not the training activities further the attainment of the goals and objectives of the business.

iv. Top management because of the value test results have in preparing reports on the effectiveness of the entire training and development operation.

Daly raised four questions in the context of evaluation and answered them as follows:

i. Why Evaluate? The trainees have to reveal improved performance as a result of training.

ii. What to evaluate? The ability of the instructor as well as methods to accomplish the given objectives.

iii. Who should evaluate? All the people involved or affected.

iv. When to evaluate? It should be a continuous process. Ideally, it should start before training and end several months after the programme.

As Bhatawdekar points out, there is a need to evaluate sessions, topics and programmes as well as the training system. Evaluation should result in improvement in training systems, functions and functionaries. If evaluation results in no action, it is a wasteful exercise.

Evaluating Training Effectiveness – 5 Different Approaches: Kirkpatrick’s Framework of Four Levels Model, Jack Phillips’ ROI Method and a Few Other Approaches

Some points you must remember while studying or evaluating training performance are as follows:

1. Observations, interviews, and questionnaires are means of gathering data related to training and not evaluating the training.

2. Work samples are sources of data used in evaluation.

Job performance is a generic term for individual and group performance measures. Such measures can help one evaluate training, provided the right measures are adopted; with the right data, one will be able to make the right analysis.

There are different approaches to evaluate training, some of which are as follows:

1. Kirkpatrick’s framework of four levels model

2. Jack Phillips’ ROI method (similar to, but an extension of, the Kirkpatrick model)

3. Stufflebeam’s CIPP model (context, input, process, and product model)

4. Warr et al.’s CIRO model (context, input, reaction, outcome model)

5. Virmani and Premila model.

1. Kirkpatrick’s Framework of Four Levels Model:

Kirkpatrick first published his ideas in 1959, in a series of articles in US training and development journals. Over time, the model has gained international acclaim and is now considered a standard across the HR and training communities. The model meets the training and learning evaluation needs of industrial and commercial organizations, supporting ongoing people development and the planning and management of training. Kirkpatrick (1998) himself later revised and updated his four-level model of training evaluation.

The four levels of Kirkpatrick’s evaluation model essentially measure reaction, learning, behaviour, and results. Evaluation at the reaction level helps to understand what the trainees think and feel about the training, and their views about the training programme. This level measures the learner’s perception (reaction) of the course.

Evaluation at the learning level attempts to answer the question, ‘did the participants learn anything?’ The evaluator finds out what the trainees have learned from the training programme, and the resulting increase in knowledge or capability. The evaluation also covers their attitudinal changes, knowledge improvement and enhanced skills. Learning evaluation requires post-training testing to ascertain the extent of learning.

Evaluation of behaviour at the third level attempts to measure the effect on job performance: the extent of behavioural change, improvement in capability, enhancement of knowledge and its application in work performance. However, keep in mind that behaviour is the action that is exhibited, while performance is the final result of that behaviour.

This evaluation tests the trainees’ ability to apply the learned skills on the job, in the workplace rather than in the classroom. Third-level evaluation can be performed formally (by testing) or informally (through observation). It helps determine whether on-the-job performance is correct by answering the question, ‘do people use their newly acquired knowledge on the job?’

Learning new skills and knowledge is no good to an organization unless the participants actually use them in their work activities.

Evaluations at the first three levels (reaction, learning, and behaviour) focus on individual trainees. The fourth level, results evaluation, measures the contribution of training efforts to organizational performance.

Evaluation at this level answers the question, ‘what impact has the training achieved?’ The effects of the training programme on the business or environment that result from the trainee’s performance are measured at this ‘results evaluation’ stage. These impacts can include monetary gains, efficiency, morale, teamwork, and similar outcomes.

It can be difficult to isolate the results of a training programme, but it is usually possible to link the contributions of training to organizational improvements. Collecting, organizing, and analysing the data and converting it into information can be more difficult, time-consuming, and costly at this level than at the other three, but the results are often worthwhile when viewed in the full context of their value to the organization.

2. Jack Phillips’ ROI Method:

Phillips’ return on investment (ROI) approach is another internationally acclaimed method of evaluating training and is endorsed by organizations such as the American Society for Training and Development (ASTD). This approach enables organizations to calculate the financial ROI of a training event.

Phillips (1997) added a fifth level to the Kirkpatrick model, which he described in his book Return on Investment in Training and Performance Improvement Programs. Some experts argue that Kirkpatrick’s model does not address the worth of the training.

Phillips (1997) called this fifth level ROI, or return on investment; it answers the question, ‘did the training pay for itself?’ Can the effort, time, and expenditure incurred for the training be justified? If so, the expenditure can be treated as an investment that enhances the potential of human assets.

In introducing the fifth level, Phillips recognized that the evaluator needs to understand the finer workings of the organization and to have some skill in determining costs and benefits.
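Phillips’ fifth level is commonly expressed as ROI (%) = (net programme benefits ÷ programme costs) × 100, where net benefits are the monetary value of the training’s results minus its costs; a benefit-cost ratio (BCR = benefits ÷ costs) is often reported alongside it. The Python sketch below assumes that Level 4 results have already been converted into money and that the full programme costs are known; the function names and the figures are hypothetical, for illustration only.

```python
def benefit_cost_ratio(benefits: float, costs: float) -> float:
    """Benefit-cost ratio (BCR): programme benefits divided by programme costs."""
    if costs <= 0:
        raise ValueError("programme costs must be positive")
    return benefits / costs


def roi_percent(benefits: float, costs: float) -> float:
    """ROI as a percentage: net programme benefits divided by programme costs, times 100."""
    if costs <= 0:
        raise ValueError("programme costs must be positive")
    net_benefits = benefits - costs
    return net_benefits / costs * 100


# Hypothetical figures: monetary benefits already derived from Level 4
# results, and the total cost of designing and delivering the programme.
benefits = 150_000.0
costs = 100_000.0
print(f"BCR = {benefit_cost_ratio(benefits, costs):.2f}")  # BCR = 1.50
print(f"ROI = {roi_percent(benefits, costs):.1f}%")        # ROI = 50.0%
```

A positive ROI means the training more than paid for itself; the hard part in practice is not the arithmetic but converting results into credible monetary values.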

3. Stufflebeam’s CIPP Model:

Daniel Stufflebeam developed the CIPP (context, input, process, and product) model of training evaluation in the 1960s. As its name suggests, the model requires the evaluation of context, input, process, and product in judging the value of a programme. Stufflebeam developed it as a means of linking evaluation with programme decision-making.

A training cycle comprises planning, structuring, implementing, and reviewing and revising decisions. The CIPP framework provides an analytical and rational basis for programme decision-making that conforms to this cycle. It is a decision-focused approach to evaluation that emphasizes the systematic provision of information for programme management and operation.

In this approach, information is viewed as the most valuable resource, because it helps programme managers make better decisions. Evaluation activities should therefore be planned to meet the decision needs of the programme staff. Because a programme can change during execution, evaluation activities should also adapt to these changing needs while maintaining continuity of focus.

The model applies this basis to every component of the training cycle: planning, structuring, implementing, and reviewing and revising decisions are examined through context, input, process, and product evaluation, respectively.

To make the evaluation process successful, an organization should consider each of these four aspects of evaluation and make decisions accordingly. Asking and answering key questions about each aspect can also help.

4. Warr et al.’s CIRO Model:

Warr et al. (1970) proposed the CIRO model, which remains one of the most widely used training evaluation models. The model addresses some of the key issues that trainers still grapple with today: how to demonstrate the impact of training on organizational objectives and, in turn, how to make a strong business case for training.

The CIRO approach has four components of evaluation (context, inputs, reactions, and outcomes), and evaluation can occur at two levels: the organizational level and the single-event level, such as a training programme. At the organizational level, the purpose of evaluation is to measure the success of the employee development strategy, specifically whether the planned objectives have been achieved. Employee development strategies depend on the organization’s size and industry.

The basic purpose of training at the organizational level, including induction programmes, may therefore differ from one organization to another. Whereas the aim of a large-scale industry may be to look at its wealth of schemes, that of a small-scale company may be mere survival. As a rule, any programme that consumes substantial resources, such as management time and money, should be evaluated regularly.

At the single event level, the purpose of evaluation is to assess how one particular event fits into the overall training cycle (business needs, identifying training needs, specifying training needs, translating training needs into actions, planning the training, and finally evaluating the training). This is a series of sequential steps that businesses need to go through in order to deliver effective training and development.

A trainer who adopts the CIRO approach has a structured model to follow when conducting training and development assessments.

The trainer should conduct training evaluation in the following areas:

C – Context or Environment within which the Training Takes Place:

The training management must objectively identify training needs through man, task, and organizational analysis. Here, evaluation is directed at pinpointing the reasons for the training or development event and at the system or strategy used to identify needs.

I – Inputs for the Training Event:

This refers to evaluation of the planning and design processes that lead to the selection of trainers, programmes, pedagogy, employees, and materials. It involves reviewing the appropriateness and accuracy of these inputs, which are crucial to the success of the training or development initiative.

For example, if someone with no flair or aptitude for teaching attends a ‘training for trainers’ programme, they are likely to hinder the learning process. Not only will they fail to learn themselves; they may also disrupt the learning environment for others, ultimately wasting the organization’s time and money.

R – Reactions to the Training Event:

The method of evaluation at this stage should suit the nature of the training undertaken. Management may want to measure the reactions of learners and assess the relevance of the training course. The assessment is directed at the content and presentation of the training event, to evaluate its quality.

O – Outcomes:

Management may wish to measure the extent to which learning is transferred to the workplace. This is easier when the training is concerned with hard, specific skills.

For example, suppose a machine operator is trained in machine technology, including selecting the speed, feed, and depth of cut appropriate to the material being machined. The outcome of the training programme can then be measured by the average number of components produced over a period.

The records of learners’ pre-training and post-training performance are therefore the relevant objective evidence, and comparing average production before and after training directly indicates the outcome. However, softer, less quantifiable competencies, including behavioural skills, cannot always be measured this way.
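The before-and-after comparison in the machine-operator example amounts to comparing two averages. The Python sketch below assumes production is recorded as a count of components per period (for example, per day); the function name and the counts are hypothetical.

```python
from collections.abc import Sequence
from statistics import mean


def output_change(before: Sequence[float], after: Sequence[float]) -> tuple[float, float, float]:
    """Compare average output per period before and after training.

    Returns (pre-training average, post-training average, percentage change).
    """
    if not before or not after:
        raise ValueError("need at least one record before and after training")
    pre = mean(before)
    post = mean(after)
    if pre == 0:
        raise ValueError("pre-training average must be non-zero")
    return pre, post, (post - pre) * 100 / pre


# Hypothetical daily component counts for one machine operator.
before_training = [40, 42, 38, 40]
after_training = [46, 44, 48, 46]
pre, post, change = output_change(before_training, after_training)
print(f"before: {pre:.1f}, after: {post:.1f}, change: {change:+.1f}%")
# before: 40.0, after: 46.0, change: +15.0%
```

A raw before/after difference does not by itself separate the effect of training from other influences, such as new tooling or changes in workload; a comparison group or a longer trend would be needed for that.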

A key benefit of using the CIRO approach is that it ensures coverage of all aspects of the training cycle.

5. Virmani and Premila Model:

In a study based on Virmani and Premila’s model of training evaluation, Jiwani (2006) set out to identify the training methods most effective in ensuring learning and its transfer to real-life situations. He based the design of the training programme on research by the World Health Organization on the skill sets required to succeed in a competitive society.

The broad objective of the programme was to equip the students with the skills required to deal effectively with the demands and challenges of the global environment. Jiwani (2006) mentions the three stages proposed by Virmani and Premila.

Stage I – Pre-Training Evaluation:

At this stage, he sent pre-training questionnaires to the trainees 15 days before the programme. He then discussed the components of the programme at a pre-programme meeting. The objectives of the pre-training evaluation were (a) to ascertain the expectations of the trainees, and (b) to ensure that the objectives of the trainer and trainees were congruent.

Stage II – Context and Input Evaluation:

At this stage, he used the collated data from the pre-training evaluation questionnaires and the records of the pre-programme meeting. He sought to understand the background of each participant and to identify the pedagogical tools best suited to achieving the set objectives.

Stage III – Post Training Evaluation:

He then carried out reaction evaluation and learning evaluation. The objective was to capture the trainees’ immediate feelings with reference to certain parameters.

The manner in which Jiwani (2006) used Virmani and Premila’s model is elucidated.